Google DeepMind, Google’s flagship AI research lab, wants to beat OpenAI at the video-generation game — and it might just, at least for a little while.

On Monday, DeepMind announced Veo 2, a next-gen video-generating AI and the successor to Veo, which powers a growing number of products across Google’s portfolio. Veo 2 can create two-minute-plus clips in resolutions up to 4K (4096 x 2160 pixels).

Notably, that’s roughly 4x the pixel count, and over 6x the duration, that OpenAI’s Sora can achieve.

It’s a theoretical advantage for now, granted. In Google’s experimental video creation tool, VideoFX, where Veo 2 is now exclusively available, videos are capped at 720p and eight seconds in length. (Sora can produce up to 1080p, 20-second-long clips.)
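The 4x and 6x comparisons can be checked with quick arithmetic. The sketch below assumes that "1080p" means 1920 x 1080 pixels and treats "two-minute-plus" as exactly 120 seconds, so the duration ratio is a lower bound; the variable names are illustrative only.

```python
# Assumption: "1080p" is taken to mean 1920 x 1080 pixels.
# The 4K dimensions (4096 x 2160) come from the article.
veo2_pixels = 4096 * 2160
sora_pixels = 1920 * 1080
pixel_ratio = veo2_pixels / sora_pixels

# Assumption: "two-minute-plus" is treated as exactly 120 seconds (a lower bound).
veo2_seconds = 2 * 60
sora_seconds = 20
duration_ratio = veo2_seconds / sora_seconds

print(f"pixel ratio: {pixel_ratio:.2f}x")
print(f"duration ratio: at least {duration_ratio:.0f}x")
```

Under these assumptions, the pixel count works out to a little over four times Sora's, and the clip length to at least six times.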

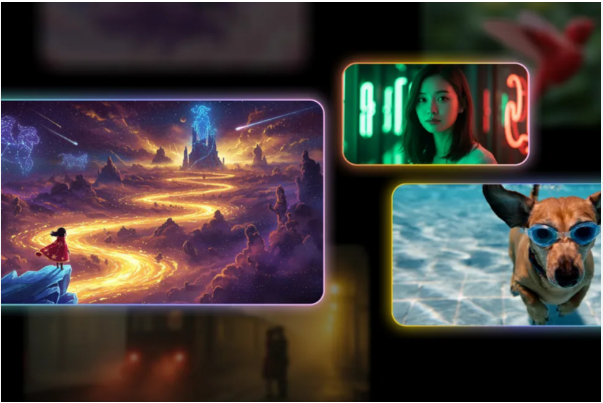

Veo 2 in VideoFX. Image Credits: Google

VideoFX is behind a waitlist, but Google says it’s expanding the number of users who can access it this week.

Eli Collins, VP of product at DeepMind, also told TechCrunch that Google will make Veo 2 available via its Vertex AI developer platform “as the model becomes ready for use at scale.”

“Over the coming months, we’ll continue to iterate based on feedback from users,” Collins said, “and [we’ll] look to integrate Veo 2’s updated capabilities into compelling use cases across the Google ecosystem … [W]e expect to share more updates next year.”

Google DeepMind, a leading artificial intelligence (AI) research organization, has unveiled a new video model designed to rival Sora, OpenAI’s video-generating model. The new model is based on a deep learning architecture and is said to analyze and understand video content at a level comparable to human performance.

Background

Video analysis is a rapidly growing field that uses AI and machine learning algorithms to interpret video content. Tasks range from object detection, tracking, and recognition to more complex ones such as activity recognition and anomaly detection.

Sora, the model Google is positioning itself against, is OpenAI’s video-generating model rather than an analysis tool. It has limitations of its own: as noted above, its clips top out at 1080p and 20 seconds.

Google DeepMind’s New Video Model

Google DeepMind’s new video model is designed to address the limitations of Sora and comparable video models. It is based on a deep learning architecture that is said to analyze and understand video content at a level comparable to human performance.

The model uses a combination of convolutional neural networks (CNNs) and recurrent neural networks (RNNs) to analyze video content. The CNNs are used to extract features from the video frames, while the RNNs are used to model the temporal relationships between the frames.
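The source gives no implementation details, so the following is only a toy illustration of the general CNN-then-RNN pattern described above, not DeepMind's code. It assumes grayscale frames stored as nested lists and hand-picked, untrained weights, and every function name (`conv2d_valid`, `frame_features`, `rnn_step`, `encode_video`) is hypothetical.

```python
import math


def conv2d_valid(frame, kernel):
    """Valid 2D cross-correlation of one grayscale frame with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(frame) - kh + 1
    out_w = len(frame[0]) - kw + 1
    return [
        [
            sum(frame[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def frame_features(frame, kernels):
    """CNN-style step: convolve, apply ReLU, then average-pool each feature map."""
    feats = []
    for kernel in kernels:
        fmap = conv2d_valid(frame, kernel)
        activated = [max(0.0, v) for row in fmap for v in row]
        feats.append(sum(activated) / len(activated))
    return feats


def rnn_step(hidden, feats, w_in, w_rec):
    """RNN-style step: h_t = tanh(W_in x_t + W_rec h_{t-1})."""
    return [
        math.tanh(
            sum(w_in[k][i] * feats[i] for i in range(len(feats)))
            + sum(w_rec[k][j] * hidden[j] for j in range(len(hidden)))
        )
        for k in range(len(hidden))
    ]


def encode_video(frames, kernels, w_in, w_rec):
    """Run per-frame feature extraction, then fold the features through the RNN."""
    hidden = [0.0] * len(w_rec)
    for frame in frames:
        hidden = rnn_step(hidden, frame_features(frame, kernels), w_in, w_rec)
    return hidden


# Illustrative inputs: two 3x3 frames, two 2x2 kernels, a 2-unit hidden state.
dark = [[0.0] * 3 for _ in range(3)]
bright = [[1.0] * 3 for _ in range(3)]
kernels = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
w_in = [[1.0, 0.0], [0.0, 1.0]]
w_rec = [[0.5, 0.0], [0.0, 0.5]]

print(encode_video([dark, bright], kernels, w_in, w_rec))
print(encode_video([bright, dark], kernels, w_in, w_rec))
```

The two calls use the same frames in opposite order and yield different final states, which is the point of the recurrent stage: it carries information about frame order that per-frame features alone would lose.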

Advantages of Google DeepMind’s New Video Model

Google DeepMind’s new video model has several advantages over Sora and other video tools. Some of the key advantages include:

1. Improved Accuracy: The model analyzes video content with a high degree of accuracy, even in complex scenes with multiple objects and activities.

2. Increased Efficiency: The model is designed to be efficient enough to analyze video content in real time.

3. Flexibility and Customizability: Users can tailor the model to their specific needs and applications.

4. Scalability: The model is designed to scale to large volumes of video data.

Applications of Google DeepMind’s New Video Model

Google DeepMind’s new video model has a wide range of applications, including:

1. Surveillance and Security: The model can be used to analyze and understand video footage from surveillance cameras, allowing for more effective and efficient security monitoring.

2. Sports Analytics: The model can be used to analyze and understand video footage from sports events, allowing for more accurate and detailed analysis of player and team performance.

3. Healthcare: The model can be used to analyze and understand video footage from medical procedures, allowing for more accurate and detailed diagnosis and treatment.

4. Autonomous Vehicles: The model can be used to analyze and understand video footage from autonomous vehicles, allowing for more accurate and detailed navigation and control.

Conclusion

Google DeepMind’s new video model is a significant advance in video analysis. Its accuracy in complex scenes with multiple objects and activities suits it to a wide range of applications, and its flexibility, customizability, and scalability make it attractive to users who need to process large volumes of video data.

Google DeepMind’s new video model, designed to rival Sora, offers several benefits across a range of applications. The key ones are listed below.

Benefits for Video Analysis

1. Improved Accuracy: The model analyzes video content with a high degree of accuracy, even in complex scenes with multiple objects and activities.

2. Increased Efficiency: The model is designed to be efficient enough to analyze video content in real time.

3. Enhanced Object Detection: The model identifies and tracks objects within video footage.

4. Better Action Recognition: The model identifies and classifies actions within video footage.

Technical Benefits

1. Advanced Deep Learning Architecture: The new video model is based on an advanced deep learning architecture that learns and improves from large datasets.

2. Improved Computational Efficiency: The model is designed to be computationally efficient, allowing it to run on a variety of hardware platforms.

3. Enhanced Flexibility and Customizability: Users can tailor the model to their specific needs and applications.

4. Scalability: The model is designed to scale to large volumes of video data and to analyze them in real time.

Benefits for Developers and Researchers

1. New Opportunities for Innovation: The model gives researchers and developers a foundation for building new applications.

2. Improved Collaboration and Knowledge Sharing: Researchers and developers can share and build on each other’s work with the model.

3. Accelerated Progress in Video Analysis: The model frees researchers and developers to focus on more complex and challenging problems.

4. Increased Accessibility and Adoption: The model makes video analysis technology available to a wider range of users.

Conclusion

Google DeepMind’s new video model offers several benefits across a range of applications. Its accuracy, efficiency, object detection, and action recognition suit it to video analysis tasks, while its deep learning architecture, computational efficiency, flexibility, and customizability make it a valuable tool for developers and researchers.